The advent of smart devices and systems with their own local AI capabilities is one of today’s broader technology trends and a sign of major digital transformation. Processing data at or near the source is faster, reduces data movement, and helps protect data privacy and security. On-chip AI, however, creates its own set of challenges: performance expectations are high, yet the chip has to be small, lightweight, and low-power.

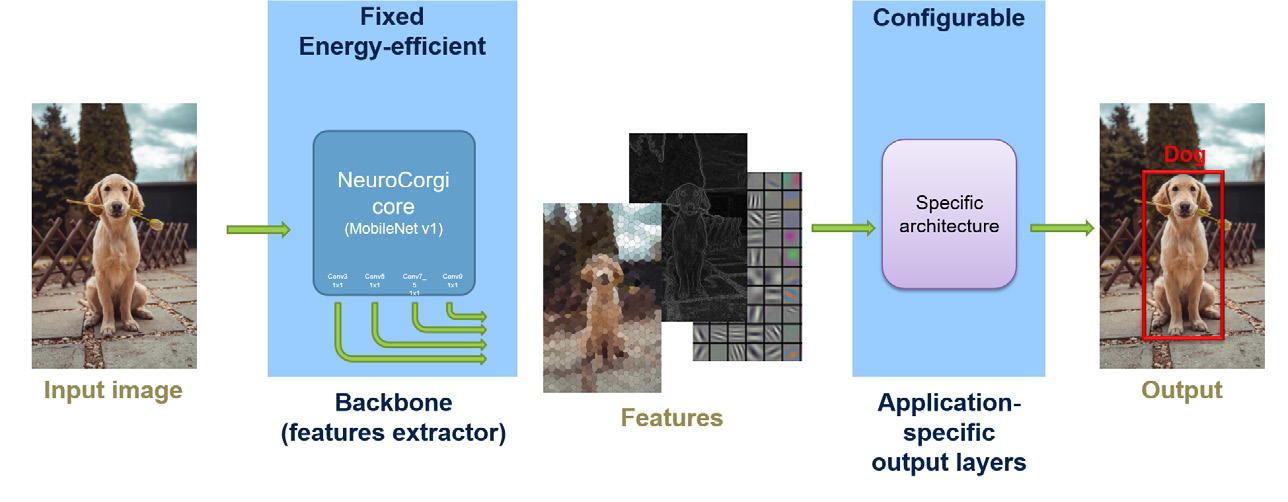

CEA-List developed a brain-inspired solution called NeuroCorgi to respond to these challenges. In the human visual cortex, the first layers of neurons are fixed fairly early in childhood. Despite this, humans can still “learn” new objects and faces for the rest of their lives. NeuroCorgi is similar in that the feature extraction backbone is fixed at the circuit design stage, much like those childhood neurons. Fixing the backbone in the circuit does away with energy-intensive memory access and data movement.

Transfer learning is then used to configure and optimize the output layers for a given application. NeuroCorgi, which can address multi-object classification, segmentation, and detection tasks, is optimized for mobile applications and uses less power than the sensor itself. NeuroCorgi is the first instance of a new class of AI accelerators: the Feature Extraction Accelerator (FEA). To ease the design of the current version and future ones, a tool called CorgiBuilder was developed. It generates the RTL code, a C++ simulation model, and the SDK for PyTorch integration.

This one-of-a-kind circuit makes it possible to address previously inaccessible applications. It enables AI processing at the edge, with a lower energy footprint than data acquisition.

We anticipate applications leading to a reduction in the use of weedkillers and pesticides in agriculture, better area monitoring and widespread obstacle detection.

Application areas: transportation, agriculture, surveillance, and defense.

“Fixing” a large part of the neural network is the key concept behind this very-low-power circuit. The first layers, used for feature extraction, are fixed in their entirety when the circuit is designed. Transfer learning is then used to optimize the output layers for each new application. This brain-inspired concept echoes how the human visual cortex learns.
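The split between a fixed feature extractor and application-specific output layers can be illustrated with a toy sketch. This is not NeuroCorgi's network or SDK: the backbone weights, the function names (`extract_features`, `train_head`, `predict`), and the two-class data are all hypothetical, chosen only to show that training touches the output layer while the backbone stays constant.

```python
import math

# Hypothetical frozen "backbone": hand-picked weights stored in immutable
# tuples, standing in for layers fixed at circuit design time.
BACKBONE_W = ((1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0))

def extract_features(x):
    """Fixed linear projection followed by ReLU; never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(samples, labels, epochs=200, lr=0.1):
    """Transfer learning step: fit only the output layer on frozen features."""
    feats = [extract_features(x) for x in samples]  # backbone runs, not trained
    w = [0.0] * len(BACKBONE_W)
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the logistic loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(head, x):
    w, b = head
    f = extract_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

if __name__ == "__main__":
    # Toy "new application": separate two clusters of 2-D points.
    samples = [(1.0, 1.0), (0.8, 0.9), (-1.0, -1.0), (-0.9, -0.8)]
    labels = [1, 1, 0, 0]
    head = train_head(samples, labels)
    print(predict(head, (0.5, 0.7)), predict(head, (-0.6, -0.4)))
```

Adapting to another task in this sketch means calling `train_head` again with new data; `BACKBONE_W` is reused unchanged, which mirrors the idea of reusing the same fixed circuit across applications.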

The circuit is built around a fixed feature extraction neural network, optimized for on-chip AI. Its design was assisted by an in-house learning framework, which handled parameter quantization and ASIC code generation. Compared to the current state of the art, this is a novel and effective approach.
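As a rough illustration of what parameter quantization involves, the sketch below maps floating-point weights to low-bit signed integers with a single shared scale. The source does not say which quantization scheme or bit width the in-house framework uses; symmetric uniform quantization at 4 bits, and the names `quantize_symmetric` and `dequantize`, are assumptions made for this example only.

```python
def quantize_symmetric(weights, bits=4):
    """Map floats to signed integers in [-qmax, qmax] with one shared scale.

    Assumed scheme (not confirmed by the source): symmetric uniform
    quantization, where qmax = 2**(bits - 1) - 1.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the integer levels."""
    return [qi * scale for qi in q]

if __name__ == "__main__":
    weights = [0.7, -0.3, 0.1, 0.0]
    q, scale = quantize_symmetric(weights, bits=4)
    print(q, scale, dequantize(q, scale))
```

Each reconstructed weight differs from the original by at most half a quantization step (`scale / 2`), which is the precision traded away for smaller integer parameters.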