Production systems will need to be flexible and agile to overcome the challenges facing the manufacturing industry. In addition to making the most of limited space and other resources, the factories of the future will have to respond to growing demand for mass customization by producing small batches, and even single units, of highly personalized products. This means having production lines, and especially robots, that any operator can reconfigure on the fly without special programming skills. Because operators and their know-how will be at the center of these production lines, the robotic systems deployed will have to work alongside humans, rapidly learn new tasks to meet new production needs, and remain easy to use. Around a dozen CEA-List labs are contributing to this cobotics project, gradually developing and assembling technology bricks that will be easy to integrate into future demonstrators.

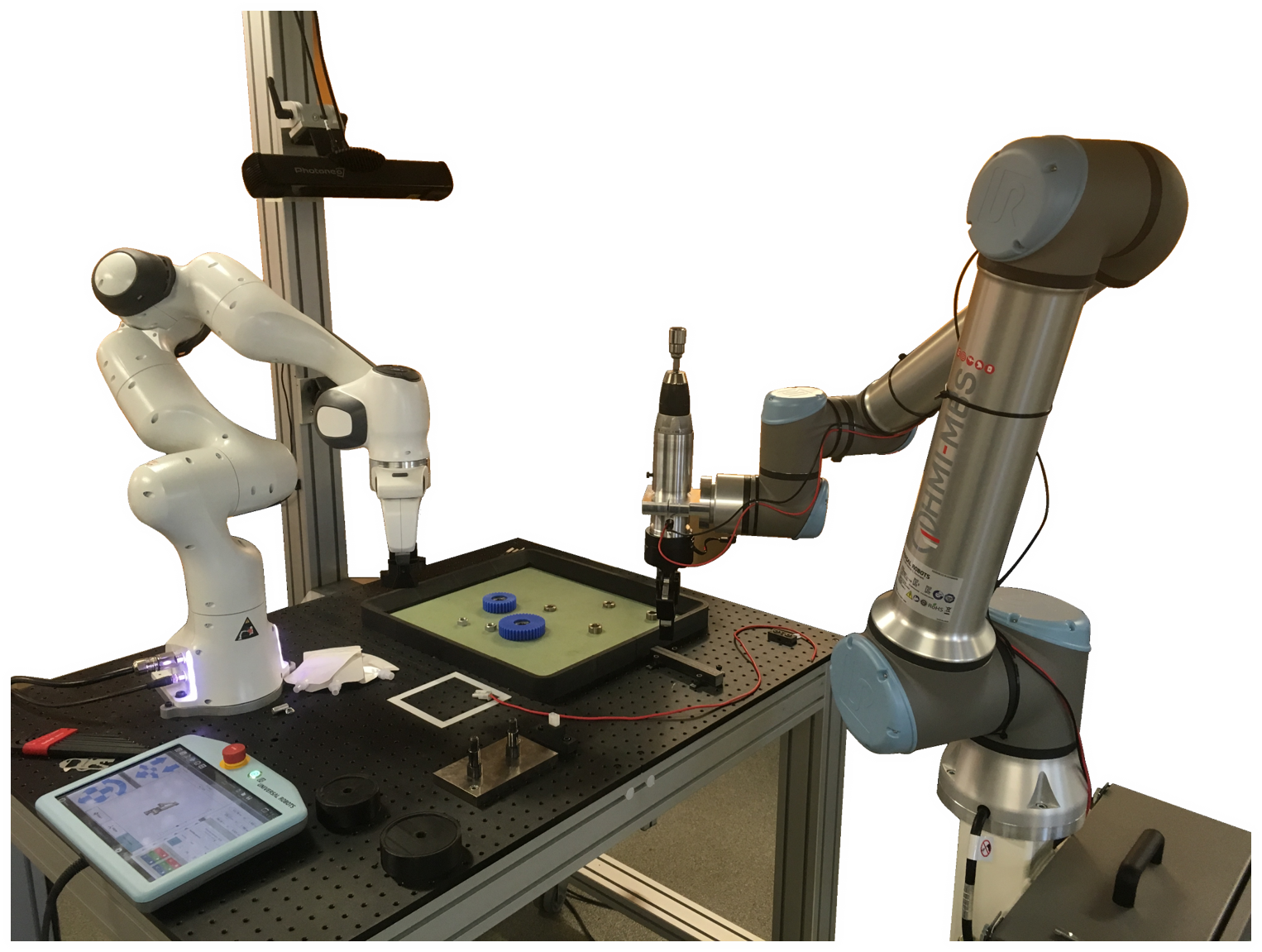

Assembly tasks are very common on production lines. But because no two assembly tasks are exactly alike, even tasks that seem simple and repetitive can be hard to robotize. Assembly procedures can involve similar-looking parts, or parts arranged randomly in bins. Another challenge is staying within the tolerances for assembly parameters such as tightening. The first demonstrator to come out of this project, designed in 2021, was a robotic arm. It identifies and locates parts using cameras combined with 2D and 3D vision technology based on both AI algorithms and more conventional object registration techniques. A command-control system enables the arm to follow the trajectory needed to reach a part and to deploy the robotic skills required for tasks like grasping and inserting it.

The demonstrator was tested on a gear assembly task, in which it successfully grasped the parts and inserted them within an assembly tolerance of 10 microns. This is a much higher degree of precision than the cobots used in factories today can deliver. The keys to this precision are decoupling movements along different directions and controlling the force exerted by the robot’s effector.
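The article does not give CEA-List’s control law. One textbook way to decouple directions is hybrid position/force control (Raibert and Craig): each Cartesian axis is assigned to either a position loop or a force loop, never both. The sketch below assumes that scheme, with hypothetical gains, a velocity-command interface, and a sign convention in which a negative z force means pushing down. It is an illustration, not the demonstrator’s controller.

```python
from dataclasses import dataclass

AXES = ("x", "y", "z")


@dataclass
class HybridController:
    """Hybrid position/force control sketch: each Cartesian axis is handled by
    exactly one loop (position or force), so the two loops never fight."""
    position_axes: frozenset  # axes under position control; the rest are force-controlled
    kp: float = 50.0          # hypothetical position gain, 1/s
    kf: float = 0.002         # hypothetical force gain, m/(s*N)

    def velocity_command(self, x, x_des, f, f_des):
        """Return a per-axis Cartesian velocity command in m/s."""
        cmd = {}
        for axis in AXES:
            if axis in self.position_axes:
                # Position loop: move toward the target coordinate.
                cmd[axis] = self.kp * (x_des[axis] - x[axis])
            else:
                # Force loop: move so the measured force approaches the target force.
                cmd[axis] = self.kf * (f_des[axis] - f[axis])
        return cmd


# Hypothetical gear insertion along z: hold x/y aligned with the shaft and
# regulate a downward contact force on z (negative = pushing down, by assumption).
ctrl = HybridController(position_axes=frozenset({"x", "y"}))
v = ctrl.velocity_command(
    x={"x": 0.101, "y": 0.200, "z": 0.050},      # measured position, m
    x_des={"x": 0.100, "y": 0.200, "z": 0.050},  # z target unused: z is force-controlled
    f={"x": 0.0, "y": 0.0, "z": -2.0},           # measured force, N
    f_des={"x": 0.0, "y": 0.0, "z": -5.0},       # desired contact force, N
)
print(v)
```

Here x and y stay position-controlled to keep the gear aligned with its shaft, while z is force-controlled, so the part seats under a bounded contact force instead of being driven to a fixed depth.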

Digital twins are another area in which CEA-List scientists and engineers excel. These virtual replicas of the production line are updated as parts are detected and located, and are used to generate optimal trajectories that let the robot move without colliding with any obstacles in its path.
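The article does not describe CEA-List’s planner. As a hedged illustration of the loop it outlines (detections update the twin, and the twin drives collision-free planning), the sketch below swaps in a 2D occupancy grid and textbook A* search; real arm planning works in joint space or 3D Cartesian space. `update_twin` and `plan` are hypothetical names.

```python
import heapq


def update_twin(grid, detections):
    """Mark the grid cells occupied by newly detected parts as obstacles."""
    for r, c in detections:
        grid[r][c] = 1


def plan(grid, start, goal):
    """A* on a 4-connected occupancy grid; returns a shortest free path or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible and consistent on this grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:  # walk the parent links back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                if g + 1 < cost.get(nxt, float("inf")):
                    cost[nxt] = g + 1
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (g + 1 + h(nxt), g + 1, nxt))
    return None


grid = [[0] * 5 for _ in range(5)]
print(len(plan(grid, (0, 0), (0, 4))))  # straight line before any detection
update_twin(grid, [(0, 2), (1, 2), (2, 2), (3, 2)])  # a detected part blocks column 2
path = plan(grid, (0, 0), (0, 4))
print(len(path), path)  # the new path detours through the only free cell, (4, 2)
```

The point of the design is that planning always runs against the latest state of the twin, so a newly detected part changes the trajectory without any reprogramming.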

The demonstrator also has a human-machine interface (HMI) that displays real-time information on the status of the task and alerts the human operator if needed. If the human operator intervenes, the vision algorithms analyze the operator’s behavior so the robot can learn from it.

The orchestration and engineering tools in CEA-List’s Papyrus software suite were instrumental in integrating the demonstrator’s many technologies so that they could interact with each other and the system could function properly. The control system was designed to be modular and interoperable so that the different technology bricks, components, and software applications could be swapped in and out easily. This also makes it easier to add new components, such as specific tools for new tasks, or even additional robots, which can then work together on tasks.
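The article does not detail the control architecture. A common way to get this kind of swap-in, swap-out modularity is to have every brick implement a shared interface and register it with an orchestrator by name. The sketch below assumes that pattern; `Skill`, `Orchestrator`, `Gripper`, and `Screwdriver` are hypothetical and are not part of Papyrus.

```python
from typing import Protocol


class Skill(Protocol):
    """Common interface every technology brick implements (hypothetical)."""
    name: str

    def run(self, part: str) -> str: ...


class Gripper:
    name = "grasp"

    def run(self, part: str) -> str:
        return f"grasped {part}"


class Screwdriver:
    name = "screw"

    def run(self, part: str) -> str:
        return f"screwed {part}"


class Orchestrator:
    """Runs a task sequence against whatever skills are currently registered."""

    def __init__(self) -> None:
        self.skills: dict[str, Skill] = {}

    def register(self, skill: Skill) -> None:
        # Adding a new brick, or replacing an existing one, is a single call.
        self.skills[skill.name] = skill

    def execute(self, steps: list[tuple[str, str]]) -> list[str]:
        # Each step names a skill and the part it acts on.
        return [self.skills[skill].run(part) for skill, part in steps]


orch = Orchestrator()
orch.register(Gripper())
orch.register(Screwdriver())
log = orch.execute([("grasp", "gear"), ("screw", "cover")])
print(log)
```

Replacing a brick, for instance with a different gripper, means registering another object under the same name; the task sequence itself does not change.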

A second demonstrator, built in 2022, was used to test two robots working together. One was equipped with a gripper and a screwdriver. Parallelizing the tasks that make up the assembly process cut total assembly time almost in half.
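To see why parallelizing helps, and why the gain can stop short of a full 2×, the sketch below computes an earliest-start schedule for two robots with precedence constraints. The task names, durations, and robot assignment are hypothetical and do not describe the demonstrator’s actual process.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    robot: str       # robot that performs the task (hypothetical assignment)
    duration: float  # seconds (hypothetical)
    deps: list = field(default_factory=list)  # tasks that must finish first


def schedule(tasks):
    """Earliest-start schedule. Tasks must be listed in a valid precedence order;
    each robot executes its own tasks in list order, one at a time."""
    robot_free = {}  # time at which each robot becomes available
    finish = {}
    for t in tasks:
        ready = max((finish[d] for d in t.deps), default=0.0)
        start = max(ready, robot_free.get(t.robot, 0.0))
        finish[t.name] = start + t.duration
        robot_free[t.robot] = finish[t.name]
    return finish


tasks = [
    Task("pick_gear_1", "A", 3.0),
    Task("pick_gear_2", "B", 3.0),
    Task("insert_gear_1", "A", 5.0, ["pick_gear_1"]),
    Task("insert_gear_2", "B", 5.0, ["pick_gear_2"]),
    Task("screw_cover", "A", 4.0, ["insert_gear_1", "insert_gear_2"]),
]
finish = schedule(tasks)
makespan = max(finish.values())
sequential = sum(t.duration for t in tasks)  # one robot doing everything
print(f"sequential: {sequential} s, two robots: {makespan} s")
```

With these made-up numbers the two-robot schedule takes 12 s versus 20 s for a single robot: the two gear branches overlap, but the final screwing step must wait for both, so it caps the speed-up.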

CEA-List also investigated use cases such as inserting connectors and opening battery compartments to remove spent batteries. The teams are currently looking at disassembly processes that could be used in factories applying circular-economy principles.

The next step will be for operators with no specific programming skills to test the demonstrators. Proof-of-concept tests have already been completed in which basic tasks were programmed by demonstration or imitation, and more complex tasks by giving instructions in natural language. Future iterations of the demonstrator will integrate not only these technologies but also reinforcement learning, both on its own and with feedback from human evaluators, as with the latest conversational agents.