AI is being adopted at lightning speed across virtually every industry, making trustworthy AI both a major challenge and an opportunity for France’s economic competitiveness and industrial sovereignty. CEA-List is contributing to Confiance.ai, a trustworthy AI program that is part of the “France 2030” national investment plan, by developing new testing software to help make AI systems more robust. The software, AIMOS (AI Metamorphism Observing Software), assesses how robust AI models are to disturbances in their inputs. The goal is to make sure that systems using AI operate as intended and produce reliable, reproducible results in all situations.

CEA-List’s software is based on metamorphic testing. Rather than comparing each output with a known correct answer, metamorphic testing checks that expected relations hold between the outputs produced for related inputs. For example, a symmetrical system should return symmetrical outputs for symmetrical inputs, and a model should not produce an incorrect result when an input image is blurred, overexposed, or underexposed. AIMOS was applied to two use cases provided by industrial companies in the Confiance.ai program.
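To make the idea concrete, here is a minimal sketch of one metamorphic relation: "brightening an image should not change the model's label." It is an illustration only, not AIMOS code. The toy image format (a list of pixel intensities), the `overexpose` disturbance, and the `toy_classifier` model are all hypothetical. The key point is that no ground-truth label is needed; the model's output on the original input serves as the reference for its output on the disturbed input.

```python
from typing import Callable, List, Sequence

Image = List[float]  # toy grayscale "image": pixel intensities in [0, 1]


def overexpose(image: Image, factor: float = 1.1) -> Image:
    """Exposure disturbance: brighten every pixel, clipping at 1.0."""
    return [min(1.0, p * factor) for p in image]


def invariance_rate(
    model: Callable[[Image], str],
    inputs: Sequence[Image],
    disturb: Callable[[Image], Image],
) -> float:
    """Fraction of inputs whose predicted label survives the disturbance.

    Metamorphic relation checked: model(disturb(x)) == model(x).
    No ground-truth label is required, only the model's own outputs.
    """
    if not inputs:
        raise ValueError("at least one input is required")
    kept = sum(1 for x in inputs if model(disturb(x)) == model(x))
    return kept / len(inputs)


def toy_classifier(image: Image) -> str:
    """Hypothetical stand-in model: flags a defect if any pixel is darker than 0.2."""
    return "defect" if min(image) < 0.2 else "ok"


if __name__ == "__main__":
    images = [[0.10, 0.50, 0.90], [0.30, 0.60, 0.80], [0.19, 0.40, 0.70]]
    # The third image flips from "defect" to "ok" once brightened (0.19 * 1.1 > 0.2).
    print(invariance_rate(toy_classifier, images, overexpose))
```

Here two of the three images keep their label, so the relation holds for two thirds of the inputs, and the third image exposes a decision that is fragile under a small exposure change.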

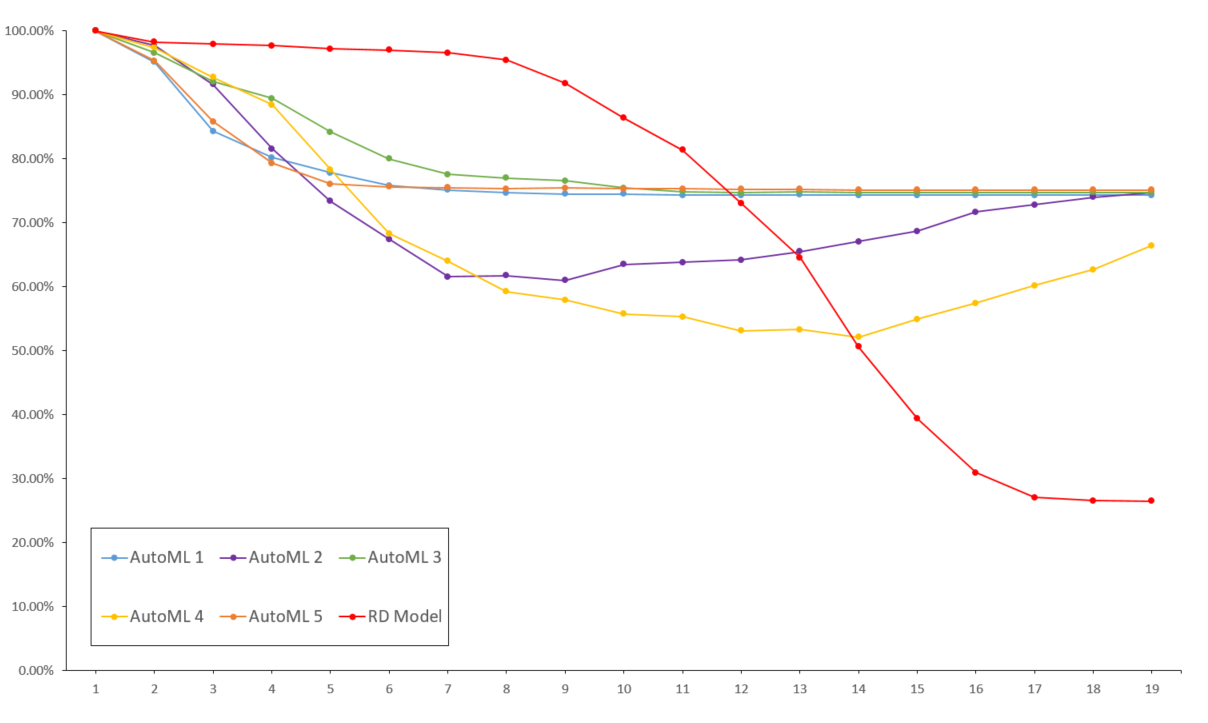

The first test case, from car maker Renault, focused on quality control of rear-axle welds from photographs. AIMOS compared how reliably different AI models performed on low-quality images. An automated process generated images of varying quality (focus, exposure, etc.). AIMOS then measured to what extent the tested AI models still produced accurate results when the “disturbances” to the inputs remained within predetermined acceptable limits. This information was used to rank the AI models by stability.
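The workflow described above (generate disturbed images within acceptable limits, check whether each model still answers correctly, then rank the models) can be sketched as follows. This is a hypothetical illustration, not the Renault or AIMOS pipeline: the one-dimensional "images", the `exposure` and `blur` disturbances, the ±10 % exposure and 0.3 blur limits, and the `threshold_model` classifiers are all assumptions, and the score used here (share of disturbed images still classified correctly) is just one plausible way to measure stability.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[float]            # toy grayscale "image", intensities in [0, 1]
Model = Callable[[Image], str]
Disturbance = Callable[[Image], Image]


def exposure(factor: float) -> Disturbance:
    """Scale all intensities by `factor` (under/overexposure), clipped to [0, 1]."""
    return lambda img: [min(1.0, max(0.0, p * factor)) for p in img]


def blur(weight: float) -> Disturbance:
    """Simple 1-D blur: mix each pixel with the mean of its two neighbours."""
    def apply(img: Image) -> Image:
        out = []
        for i, p in enumerate(img):
            left = img[max(i - 1, 0)]
            right = img[min(i + 1, len(img) - 1)]
            out.append((1 - weight) * p + weight * (left + right) / 2)
        return out
    return apply


def threshold_model(t: float) -> Model:
    """Hypothetical stand-in for a weld classifier with decision threshold t."""
    return lambda img: "defect" if min(img) < t else "ok"


def robust_accuracy(model: Model, labelled: Sequence[Tuple[Image, str]],
                    disturbances: Sequence[Disturbance]) -> float:
    """Share of (image, disturbance) pairs that are still classified correctly."""
    pairs = [(img, label, d) for img, label in labelled for d in disturbances]
    correct = sum(1 for img, label, d in pairs if model(d(img)) == label)
    return correct / len(pairs)


def rank_by_stability(models: Dict[str, Model],
                      labelled: Sequence[Tuple[Image, str]],
                      disturbances: Sequence[Disturbance]) -> List[Tuple[str, float]]:
    """Return (name, score) pairs, most stable model first."""
    scores = [(name, robust_accuracy(m, labelled, disturbances))
              for name, m in models.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


# Assumed "acceptable limits": exposure within +/-10 % and a mild blur.
DISTURBANCES = [exposure(0.9), exposure(1.1), blur(0.3)]
LABELLED = [
    ([0.10, 0.50, 0.90], "defect"),
    ([0.30, 0.60, 0.80], "ok"),
    ([0.18, 0.40, 0.70], "defect"),
    ([0.45, 0.55, 0.65], "ok"),
]

if __name__ == "__main__":
    models = {"model_a": threshold_model(0.20), "model_b": threshold_model(0.25)}
    for name, score in rank_by_stability(models, LABELLED, DISTURBANCES):
        print(f"{name}: {score:.3f}")
```

In this toy setup `model_b` keeps all 12 disturbed cases correct and ranks first, while `model_a` misclassifies the blurred version of the third image. Keeping the disturbance limits explicit in one list makes it easy to tighten or relax them and re-rank the models.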

The second test case, the ACAS Xu detect-and-avoid system, was provided by Airbus. AIMOS was used to test 45 neural networks developed to analyze the speeds and positions of other drones in the immediate vicinity. Here, the networks had to predict symmetrical avoidance maneuvers for symmetrical angles of approach. The AIMOS analysis showed that 42 of the 45 networks were more than 95% stable, while the remaining three were 60% to 70% stable.
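The symmetry check can be sketched as a metamorphic relation: mirror an encounter across the ownship's track and verify that the advisory is mirrored too (left becomes right and vice versa). This is an assumption-laden illustration, not AIMOS code. The five-value input layout and the five advisories (clear of conflict, weak/strong left, weak/strong right) follow the publicly available ACAS Xu benchmark, and it is assumed, not confirmed by the text, that the tested networks use the same layout. The `toy_network` models, the sampling ranges, and the way the 95% figure is used as a pass threshold are hypothetical.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

# Assumed input layout, following the public ACAS Xu benchmark:
# (rho: distance to intruder, theta: bearing to intruder,
#  psi: intruder heading, v_own: ownship speed, v_int: intruder speed)
Encounter = Tuple[float, float, float, float, float]
Network = Callable[[Encounter], str]

# Advisories: clear of conflict, weak/strong left, weak/strong right.
MIRRORED_ADVISORY = {"COC": "COC", "WL": "WR", "WR": "WL", "SL": "SR", "SR": "SL"}


def mirror(enc: Encounter) -> Encounter:
    """Reflect the encounter across the ownship's track: both angles change sign."""
    rho, theta, psi, v_own, v_int = enc
    return (rho, -theta, -psi, v_own, v_int)


def symmetry_stability(net: Network, encounters: List[Encounter]) -> float:
    """Share of encounters where net(mirror(e)) is the mirror image of net(e)."""
    ok = sum(1 for e in encounters if net(mirror(e)) == MIRRORED_ADVISORY[net(e)])
    return ok / len(encounters)


def toy_network(bias: float) -> Network:
    """Hypothetical stand-in for one network; a nonzero bias breaks symmetry."""
    def net(enc: Encounter) -> str:
        rho, theta = enc[0], enc[1]
        if rho > 8000:
            return "COC"
        strength = "S" if rho < 3000 else "W"
        if theta > bias:
            return strength + "R"
        if theta < bias:
            return strength + "L"
        return "COC"
    return net


def random_encounters(n: int, seed: int = 0) -> List[Encounter]:
    """Uniformly sampled encounters over illustrative (assumed) ranges."""
    rng = random.Random(seed)
    return [
        (rng.uniform(0, 10000), rng.uniform(-math.pi, math.pi),
         rng.uniform(-math.pi, math.pi), rng.uniform(100, 1200),
         rng.uniform(0, 1200))
        for _ in range(n)
    ]


if __name__ == "__main__":
    encounters = random_encounters(2000)
    nets: Dict[str, Network] = {"net_sym": toy_network(0.0),
                                "net_biased": toy_network(0.5)}
    for name, net in nets.items():
        score = symmetry_stability(net, encounters)
        verdict = "stable" if score > 0.95 else "needs review"
        print(f"{name}: {score:.1%} ({verdict})")
```

The symmetric toy network satisfies the relation on every sampled encounter, whereas the biased one turns left for all bearings near zero and fails whenever the mirrored encounter should have produced a right turn, so it falls below the 95% mark.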

These two test cases showed that AIMOS is easy to use, produces reproducible results, and applies to very different use cases. Renault plans to integrate the software into its quality control process. The software will undergo further development and testing on use cases from other Confiance.ai partners.