The purpose of the research was to design a software module that lets robots understand and execute tasks from instructions given in natural language or as images. The principle is to translate intuitive interactions into specific physical actions. We integrated a general-purpose (foundation) transformer model that had been pretrained on a large dataset of robot trajectories, then fine-tuned it on our own data to improve performance on the target tasks.

The model we ultimately selected, Octo, adapts efficiently to various robotic configurations, requires relatively little data, and has moderate computing requirements. What makes Octo so flexible is a modular attention structure that lets the model adjust easily to the specifics of the target tasks. This in turn improves both generalization and performance across a wide range of robotic tasks.
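
To make the idea of a modular attention structure more concrete, here is a minimal sketch of a block-wise attention mask. It is an illustration only, not the Octo implementation: the token groups (`task`, `obs`, `readout`), the visibility rules, and every function name are assumptions chosen for clarity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    group: str     # hypothetical groups: "task", "obs", or "readout"
    timestep: int  # -1 for task tokens, which are not tied to a timestep


def build_tokens(n_task, n_obs_per_step, n_readout_per_step, horizon):
    """Lay out tokens as: task tokens, then (obs, readout) blocks per timestep."""
    tokens = [Token("task", -1) for _ in range(n_task)]
    for t in range(horizon):
        tokens += [Token("obs", t) for _ in range(n_obs_per_step)]
        tokens += [Token("readout", t) for _ in range(n_readout_per_step)]
    return tokens


def may_attend(query, key):
    """Assumed visibility rules between token groups."""
    if key.group == "task":
        return True  # every token can read the task description
    if query.group == "task":
        return False  # task tokens only see other task tokens
    if key.group == "readout":
        # Readout tokens are passive: only readouts of the same timestep see them.
        return query.group == "readout" and query.timestep == key.timestep
    # Key is an observation token: visible causally (same or earlier timestep).
    return key.timestep <= query.timestep


def build_mask(tokens):
    """mask[i][j] is True when token i (query) may attend to token j (key)."""
    return [[may_attend(q, k) for k in tokens] for q in tokens]


tokens = build_tokens(n_task=2, n_obs_per_step=3, n_readout_per_step=1, horizon=2)
mask = build_mask(tokens)
```

Under these assumed rules, a new input (for example an extra camera) only contributes extra observation tokens; the rules governing the existing groups stay unchanged, which is one plausible way a modular structure can ease adaptation to a new robot setup.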

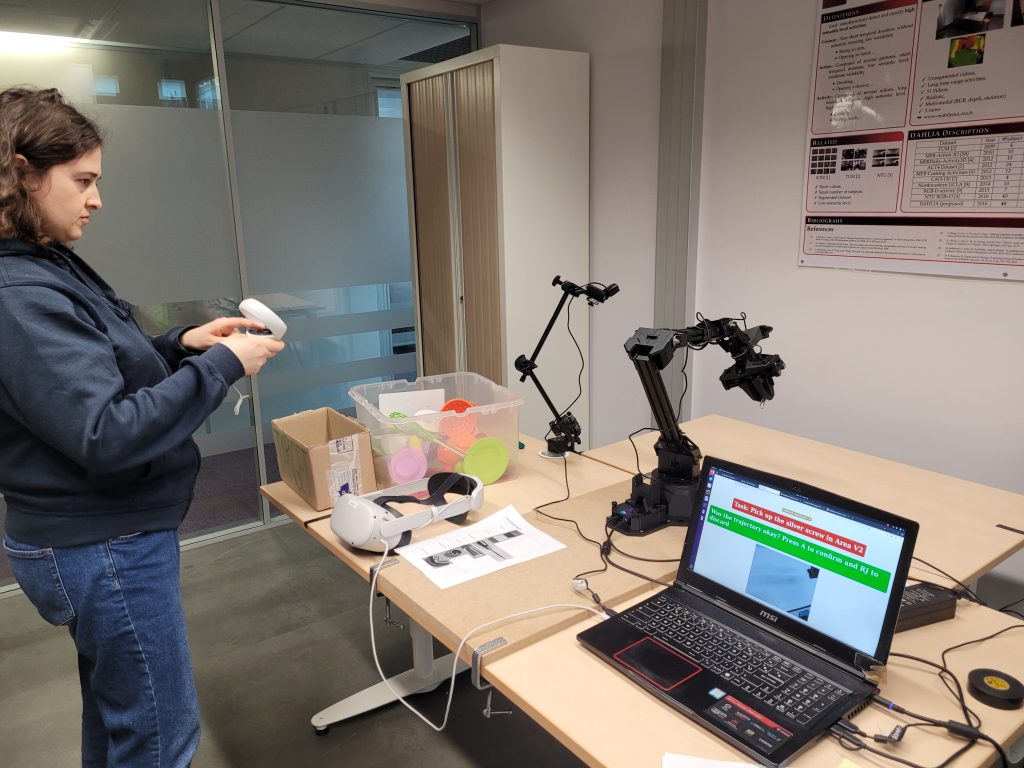

We also developed a teleoperation mode to gather data specific to the robotic grasping task at hand. The system is built on a lightweight six-axis robot controlled remotely with a virtual reality joystick, enabling the precise, intuitive handling that is essential for quality data acquisition. To generate the actual data, volunteers performed robotic grasping tasks involving a dozen objects handled in four distinct spatial configurations.

The diversity of objects and spatial configurations is important to ensure that the data is representative of real-world tasks and to give the robot an opportunity to learn across a wide variety of handling scenarios. CEA-List’s PIXANO software was used to “clean” the data gathered, correcting any annotation errors.

The Octo model was then fine-tuned on a cleaned training dataset of 678 trajectories, with a separate test dataset of 70 trajectories. Once trained, Octo successfully identified and grasped objects from the training dataset, whether placed alone or among distractor objects, without a dedicated 3D perception system.
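
The split sizes above can be reproduced with a short sketch. The source does not say how trajectories were assigned to each set, so the seeded random shuffle, the integer trajectory IDs, and the function name `split_trajectories` are all assumptions made for illustration.

```python
import random

N_TRAIN = 678  # training trajectories reported in the text
N_TEST = 70    # test trajectories reported in the text


def split_trajectories(trajectory_ids, n_test, seed=0):
    """Return (train_ids, test_ids) using a seeded shuffle (assumed method)."""
    ids = list(trajectory_ids)
    if n_test > len(ids):
        raise ValueError("n_test exceeds the number of trajectories")
    rng = random.Random(seed)  # local RNG keeps the split reproducible
    rng.shuffle(ids)
    return ids[n_test:], ids[:n_test]


# Hypothetical IDs 0..747 stand in for the recorded trajectories.
train_ids, test_ids = split_trajectories(range(N_TRAIN + N_TEST), N_TEST)
test_fraction = N_TEST / (N_TRAIN + N_TEST)
```

With these counts, the test set holds roughly 9% of the 748 cleaned trajectories. In practice, a split stratified by object or spatial configuration might be preferable, but nothing in the text indicates which approach was used.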

Research on more complex tasks, including bimanual object grasping, is currently underway.

These advances came out of our research on intuitive programming, the purpose of which is to help make robotics more accessible to operators without specialist knowledge or training.

The goal of our research is to leverage artificial intelligence to develop robotic systems that are robust, accessible, and rapidly deployable in industrial settings.