Digital technology is something we depend on for many of our activities—and something we have come to take for granted. For people with visual impairments, however, these everyday tools are far from optimal. Accessing digital content—especially when it includes visual elements like images or graphics—can be hard. Text-to-speech and other workarounds are not very sophisticated or interactive and do little to remove barriers for people who have both visual and hearing impairments.

CEA-List sensory and ambient interface experts designed an innovative display with haptic feedback that could fill this gap. Local vibrations, which the user can feel as they interact with or explore the surface of the screen, provide context-enriched information (i.e., information about the position of the user’s finger on the screen). The technology is also multi-touch, which means it can respond to more than one finger on the surface of the screen at the same time.

The display was developed as part of the EU Ability project. A recently presented functional prototype, built with an OLED display for a 10-inch tablet, was used to demonstrate the feasibility of the multi-sensory technology for three use cases.

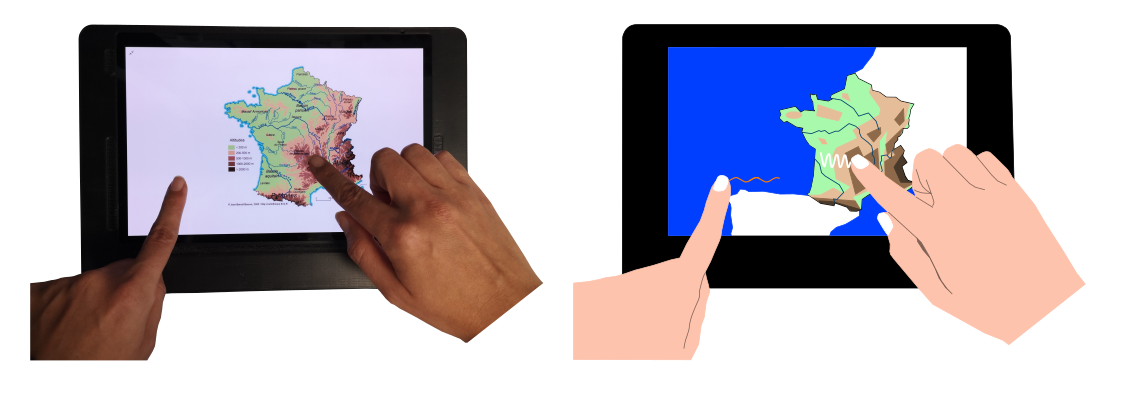

During the demonstration, users were able to explore a geographical map with their fingers and locate points of interest. ©CEA

The device consists of a matrix of piezoelectric actuators glued to the back of a tablet display. The actuators are mechanically coupled to the display so that they can make it vibrate.

The real innovation lies in the matrix’s control algorithm, which can locate several inputs and respond to each of them simultaneously with its own local vibration. The algorithm is based on a proprietary reverse-filtering technique developed by CEA-List. It can handle up to ten fingers at once, provided they are spaced at least 15 mm apart.
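The reverse-filtering technique itself is proprietary and is not detailed in this article. Purely as an illustration of the general idea, the sketch below uses a textbook regularized least-squares inverse filter, which is not necessarily what CEA-List does. It assumes a hypothetical response matrix `H` (how strongly each actuator moves each touch point) and computes drive amplitudes that produce a chosen vibration under each finger. Every name and number here is an assumption made for the example.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def inverse_filter(H, target, reg=1e-9):
    """Minimum-energy drive amplitudes a such that H a is close to target.

    H[i][j] is the (assumed, measured beforehand) response at touch point i
    to a unit drive on actuator j. Computes a = H^T (H H^T + reg I)^-1 target.
    """
    m, n = len(H), len(H[0])
    G = [[sum(H[i][k] * H[j][k] for k in range(n)) + (reg if i == j else 0.0)
          for j in range(m)] for i in range(m)]
    y = solve(G, target)
    return [sum(H[i][j] * y[i] for i in range(m)) for j in range(n)]


def response(H, drive):
    """Vibration amplitude obtained at each touch point for the given drive."""
    return [sum(h * a for h, a in zip(row, drive)) for row in H]


# Hypothetical case: two fingers, three actuators.
H = [[1.0, 0.5, 0.2],
     [0.3, 0.8, 0.6]]
target = [1.0, 0.0]  # vibrate under finger 1, stay still under finger 2
drive = inverse_filter(H, target)
print(drive, response(H, drive))
```

In this toy model, the second finger feels nothing because the actuator contributions cancel at its position. A real system would work with measured, frequency-dependent responses rather than a small fixed matrix.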

The innovation can also be used in two other novel ways. First, in addition to the mechanical vibrations described above, the matrix can also emit sound vibrations (at higher frequencies) to produce a truly multisensory experience. This experience will be further enhanced by the algorithm’s ability to control the directivity—in other words, the perceived position—of an audio source.
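The article does not say how the algorithm controls directivity. One generic way to steer sound from several emitters is delay-and-sum beam steering, sketched below for an assumed straight row of emitters. The emitter positions, the function name, and the speed-of-sound constant are all illustrative assumptions, not details of the CEA-List system.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 °C


def steering_delays(emitter_x_m, angle_deg):
    """Per-emitter firing delays (seconds) that steer a beam.

    emitter_x_m: positions of the emitters along a line, in meters.
    angle_deg: steering angle measured from the direction perpendicular
               to the line (0 means straight ahead).
    Delays are shifted so that the earliest emitter fires at time 0.
    """
    s = math.sin(math.radians(angle_deg))
    raw = [x * s / SPEED_OF_SOUND_M_S for x in emitter_x_m]
    earliest = min(raw)
    return [d - earliest for d in raw]


# Hypothetical row of three emitters spaced 10 mm apart, steered 30 degrees.
print(steering_delays([0.0, 0.01, 0.02], 30.0))
```

With a steering angle of 0, every delay is zero and the emitters fire together. Tilting the beam toward one side means delaying the emitters on that side so that all wavefronts line up in the chosen direction.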

The second is contactless interaction with the display, which is currently being prototyped. This capability is aimed not only at people with visual impairments but also at people who have difficulty interacting by touch, such as those with motor disabilities. The technology could also prove useful in situations where touch interaction is impractical, like car interiors and industrial workstations.

Here’s how it works: The piezoelectric matrix emits ultrasound waves (at frequencies above the audible range) and then captures the waves that bounce back off the user. By analyzing this echo signal, the algorithm can interpret the user’s movements and generate the corresponding response.
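The echo principle described above can be illustrated with a basic time-of-flight estimate. This is a minimal sketch, not the project's signal processing: it finds the delay at which a received signal best matches the emitted pulse, then converts that round-trip delay into a distance. The pulse shape, the sample rate, and the function names are assumptions chosen for the example.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 °C


def echo_delay_samples(emitted, received):
    """Lag (in samples) at which the received signal best matches the emitted pulse."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(emitted) + 1):
        # Correlation between the pulse and the received window starting at lag.
        score = sum(e * received[lag + k] for k, e in enumerate(emitted))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def distance_m(delay_samples, sample_rate_hz):
    """Distance to the reflecting object; the wave travels there and back."""
    round_trip_s = delay_samples / sample_rate_hz
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


# Hypothetical capture: a short pulse comes back attenuated after 120 samples.
pulse = [1.0, -1.0, 1.0, -1.0]
received = [0.0] * 120 + [0.3 * p for p in pulse] + [0.0] * 20
lag = echo_delay_samples(pulse, received)
print(lag, distance_m(lag, 192_000))
```

Tracking how this distance changes from one pulse to the next is one simple way to detect a hand approaching or moving away. Recognizing richer gestures would require combining the echoes captured across the whole matrix.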

CEA-List is working closely with several other Ability project partners to fine-tune the technology and its planned use cases.

The purpose of the Horizon 2022-2025 Ability project is to respond to the challenge of content accessibility for people with visual impairments, including those who also have hearing impairments, by designing a complete portable solution. The technologies developed include CEA-List’s multisensory tablet and a pin-based Braille display, also based on piezoelectric actuators.

CEA-List has produced a demonstrator of this second display. Unlike similar devices available on the market, it is based on an innovative concept that requires fewer actuators, which makes the system more economical and easier to distribute to a wider audience.

In addition to CEA-List, the Ability project consortium includes a research institute (OFFIS), a manufacturer of equipment for people with visual impairments (Insidevision), two end-user organizations (H-lab and LASS), a university (ULUND), and two manufacturers (Samsung and Siemens).

The main innovation behind this technology is the use of a single actuator—the piezo matrix—to enable different display features. This advantage will open up a larger market to absorb the additional cost of integrating the technology into the display, which will help make this reliable solution—and digital content—more readily available at a lower cost to people with visual impairments.

It all started with the multi-point, localized haptic feedback display technology developed by our laboratory. We became aware of an EU call for projects around accessibility, and we thought our technology might be a good fit for this kind of multi-partner project!

We are currently finishing up the hardware and integrating the software layers. The display will then be operational. We will be completing a series of user tests in 2025 to figure out the best haptic strategies. Next, we’ll be looking at how to integrate sound in a way that is complementary to the haptic features. The idea is to create a truly multisensory tablet.