In this use case, the vehicle had to reconcile data from several viewpoints, cope with wireless network fluctuations, and, ultimately, perceive its environment well enough to navigate safely through a parking lot that was itself equipped with sensors. Technologies from three laboratories were combined to address these challenges.

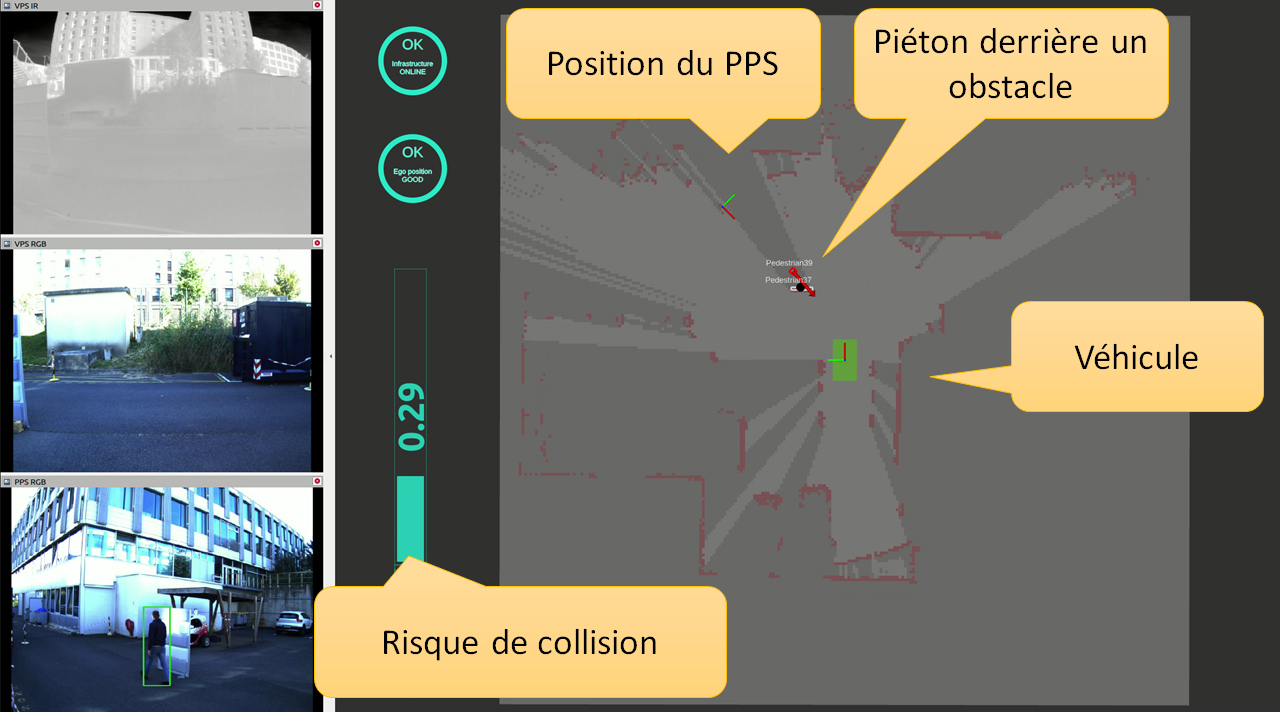

A multi-sensor processing pipeline was built (using eMMOTEP) to detect obstacles and, especially, pedestrians, even in low light. Data from color and infrared cameras and a multi-slice LiDAR sensor are used to create an occupancy map around the vehicle. Every detected obstacle is classified and labeled, and its trajectory is predicted (using SigmaFusion).
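To make the idea of an occupancy map concrete, here is a minimal sketch in Python. It is not the eMMOTEP or SigmaFusion implementation: the function name `occupancy_grid`, the grid size, and the cell size are all assumptions chosen for illustration, and the input is reduced to 2D obstacle points expressed in meters with the vehicle at the origin.

```python
import math

def occupancy_grid(points, size_m=20.0, cell_m=0.5):
    """Mark the grid cells that contain at least one obstacle point.

    points: iterable of (x, y) positions in meters, vehicle at the origin.
    Returns a square list of lists of 0/1 (row index follows y, column index follows x).
    All names and default values here are hypothetical.
    """
    n = int(round(size_m / cell_m))   # number of cells per side
    half = size_m / 2.0               # the grid is centered on the vehicle
    grid = [[0] * n for _ in range(n)]
    for x, y in points:
        col = math.floor((x + half) / cell_m)
        row = math.floor((y + half) / cell_m)
        # Points that fall outside the mapped area are ignored.
        if 0 <= row < n and 0 <= col < n:
            grid[row][col] = 1
    return grid
```

A real pipeline would typically store an occupancy probability per cell and fuse several sensors over time; the binary grid above only shows the underlying data structure.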

The parking lot’s sensors (cameras and LiDAR) also gather data, which is used to map the fixed (walls) and moving (pedestrians, vehicles, etc.) obstacles in the environment. To account for its own position in the parking lot and to match the obstacles in the map generated by the parking lot’s sensors with those in the map generated by its own sensors, the vehicle has to realign the two maps.
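The article does not say how the two maps are realigned. As one possible illustration, the sketch below estimates the 2D rotation and translation that best superimpose obstacles seen by the vehicle onto the same obstacles seen by the parking lot, using the standard closed-form least-squares solution for rigid alignment. It assumes the obstacle pairs have already been matched, which is a strong simplification; the function name `align_maps` is hypothetical.

```python
import math

def align_maps(vehicle_pts, lot_pts):
    """Least-squares 2D rigid alignment of matched obstacle positions.

    vehicle_pts[i] and lot_pts[i] are assumed to be the same obstacle seen
    in the vehicle frame and in the parking lot frame (hypothetical input).
    Returns (theta, tx, ty) such that lot ~= R(theta) * vehicle + (tx, ty).
    """
    n = len(vehicle_pts)
    cvx = sum(p[0] for p in vehicle_pts) / n   # vehicle-frame centroid
    cvy = sum(p[1] for p in vehicle_pts) / n
    clx = sum(q[0] for q in lot_pts) / n       # lot-frame centroid
    cly = sum(q[1] for q in lot_pts) / n

    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(vehicle_pts, lot_pts):
        ax, ay = px - cvx, py - cvy            # centered vehicle point
        bx, by = qx - clx, qy - cly            # centered lot point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    theta = math.atan2(s_cross, s_dot)         # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    tx = clx - (c * cvx - s * cvy)             # translation maps centroid to centroid
    ty = cly - (s * cvx + c * cvy)
    return theta, tx, ty
```

In practice the correspondences are not known in advance, so a method of this kind is usually wrapped in an iterative matching step and made robust to moving obstacles.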

The quality of the wireless (Wi-Fi) connection between the vehicle and the parking lot fluctuates. A monitoring component was designed in PolyGraph to measure communication quality continuously so that response times remain predictable. If the connection is degraded or interrupted, the vehicle switches to a slower, degraded mode.
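The switching logic described above can be sketched as a small monitor that keeps the most recent latency measurements and reports which mode the vehicle should use. This is only an illustration and not the PolyGraph model: the class name `LinkMonitor`, the 50 ms threshold, the window size, and the mode names are all assumptions.

```python
from collections import deque

class LinkMonitor:
    """Track recent message latencies and choose an operating mode.

    Hypothetical sketch: the threshold and window size are illustrative values.
    """

    def __init__(self, max_latency_ms=50.0, window=5):
        self.max_latency_ms = max_latency_ms
        self.samples = deque(maxlen=window)    # keeps only the latest samples

    def record(self, latency_ms):
        """Store one measurement; pass None for a lost or timed-out message."""
        self.samples.append(latency_ms)

    def mode(self):
        """Return "nominal" when the link is healthy, otherwise "degraded"."""
        # No data yet, or any recent loss, counts as an unreliable link.
        if not self.samples or any(s is None for s in self.samples):
            return "degraded"
        mean = sum(self.samples) / len(self.samples)
        return "nominal" if mean <= self.max_latency_ms else "degraded"
```

With a window of three samples, recording latencies of 10, 20, and 30 ms yields "nominal", while a single lost message keeps the monitor in "degraded" until that sample leaves the window. Starting in "degraded" before any measurement arrives is a deliberately conservative design choice.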

CEA-List’s Twizy (via the Carnot Network’s Carnauto institute for automotive technologies) was equipped with the prototype. A mobile tower with NVIDIA processors was set up in the Nano-Innov parking lot in Palaiseau to test the system.

Demos of NeVeOS were given at CEA-List Tech Days (June 6-7, 2023) and at the Journée Outils Logiciels Matériels pour la Recherche sur les Véhicules Terrestres Autonomes at ENS Paris-Saclay (October 5, 2023).

Integrating technologies from three different labs into a single vehicle is no mean feat, which makes us even prouder to present a demonstrator that works.