The entire history of classical computing has been guided by performance measurement, with evaluation tools such as the SPEC® benchmark for CPUs and the LINPACK benchmark, the reference benchmark for the TOP500 ranking of supercomputers. Quantum computing, however, is still in its early days, and no quantum-specific benchmark has yet emerged, which makes performance evaluation a crucial issue for the coming years.

The MetriQs-France quantum initiative, part of the national France 2030 plan, includes a project called BACQ, which will produce the building blocks of a future application-focused evaluation method for quantum processing units (QPUs). This method could lead to standards at the European (CEN/CENELEC) and international (ISO/IEC) levels.

The tests included in the benchmark will focus on optimization problems, solving linear systems, prime number factorization, and quantum simulation of N-body problems, which are believed to be the four cornerstones of tomorrow's quantum computing use cases. The CEA's List, Irig, and IPhT institutes are cooperating on these use cases.

Test implementations must allow considerable flexibility, so that the advantage of a given technology for a specific use case can be assessed while the comparison remains fair. The overriding objective is to exploit the full strengths of each QPU family, which will also require architecture-specific expertise. The planned approach applies to a wide range of QPU technologies, both gate-based and analog (i.e., quantum simulators), and will remain robust as QPUs advance toward fault-tolerant quantum computing (FTQC).

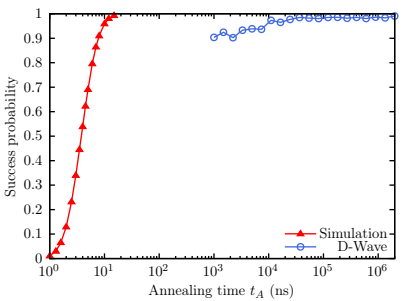

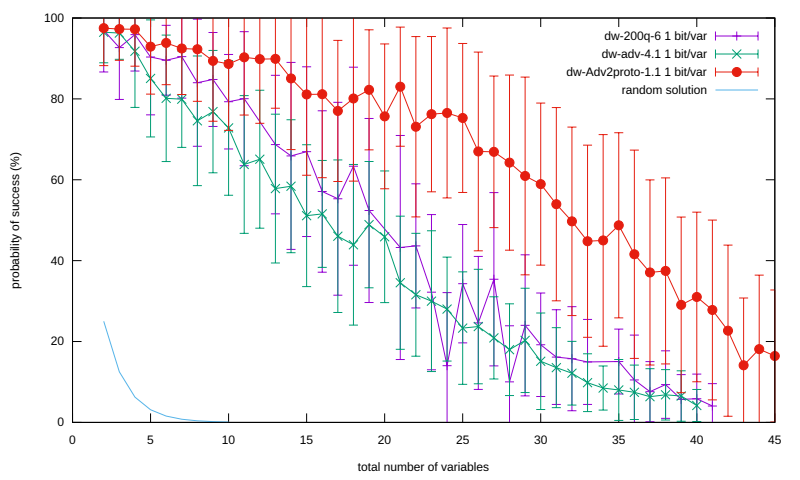

Recently, a hallmark optimization problem, maximum cardinality matching on the Gn graph series [1], was studied in detail with the quantum computing team at FZ-Jülich, Germany's largest HPC center. Solving linear systems with D-Wave® quantum annealing [2] was also studied. The first study produced a list of best practices for using D-Wave® QPUs, along with an overview of the QPUs' capabilities and limitations on the problems they are specifically designed to solve. The second study showed that, even for a problem these QPUs were not originally designed to address, they can offer a polynomial advantage over classical computing under certain conditions.
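To make the matching problem concrete: a D-Wave annealer minimizes a quadratic unconstrained binary optimization (QUBO) objective, so the problem must first be written in that form. The Python sketch below assumes a standard penalty formulation, which is not necessarily the one used in [1]: one binary variable per edge, a reward of -1 for each selected edge, and a penalty for every pair of selected edges that share a vertex. The function names are hypothetical, and exhaustive search stands in for the annealer, so the sketch only works on tiny graphs.

```python
from itertools import product

# Hypothetical helpers illustrating a standard QUBO penalty formulation of
# maximum cardinality matching (assumed; not necessarily the one used in [1]).


def matching_qubo(edges, penalty=2.0):
    """Return a QUBO as {(i, j): coefficient}, one binary variable per edge.

    x_i = 1 means edges[i] is in the matching. Each selected edge earns -1;
    each pair of selected edges sharing a vertex costs `penalty`. With
    penalty > 1, removing an edge from a conflicting pair always lowers the
    energy, so every minimizer is a valid matching of maximum size.
    """
    qubo = {(i, i): -1.0 for i in range(len(edges))}
    for i in range(len(edges)):
        for j in range(i + 1, len(edges)):
            if set(edges[i]) & set(edges[j]):  # edges share an endpoint
                qubo[(i, j)] = penalty
    return qubo


def energy(qubo, x):
    """Evaluate the QUBO objective for a 0/1 assignment x."""
    return sum(c * x[i] * x[j] for (i, j), c in qubo.items())


def brute_force_min(qubo, n):
    """Exhaustive search standing in for the annealer (tiny n only)."""
    best = min(product((0, 1), repeat=n), key=lambda x: energy(qubo, x))
    return best, energy(qubo, best)


# Path graph 0-1-2-3: the maximum matching uses the two end edges.
edges = [(0, 1), (1, 2), (2, 3)]
assignment, e = brute_force_min(matching_qubo(edges), len(edges))
print(assignment, e)  # (1, 0, 1) -2.0
```

On this path graph the minimum energy is -2, reached by selecting the two end edges, i.e., a maximum matching of size 2. The `{(i, j): coefficient}` dictionary follows the same layout that D-Wave's Ocean SDK accepts in `dimod.BinaryQuadraticModel.from_qubo`, which is the point where a real annealer run would replace the brute-force search.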

These results can be considered the first building blocks of a future QPU benchmark.

Fault-tolerant quantum computing (FTQC) is a prerequisite for potentially exponential speedups. However, noisy intermediate-scale quantum (NISQ) computers may already deliver polynomial speedups in certain applications, opening the door to quantum utility (QU).

Quantum technologies are surrounded by many myths in the public mind. It is up to science, and especially metrology, to provide the information people need to trust quantum technologies. This is exactly what the CEA, Eviden, Thales, and Teratec aim to do in this project, which will provide a framework for evaluating QPU performance on specific use cases.