The safety of autonomous systems built on AI can be difficult to guarantee. The main challenges arise from the complexity of the underlying software and from the frequent use of deep learning to build these systems. CEA-List has developed a runtime safety supervision environment that addresses these challenges.

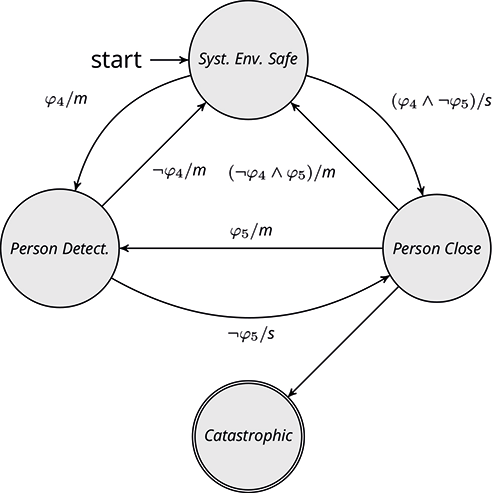

The system's runtime risks and variables (uncertainty-based confidence measurements) are first identified and analyzed to produce a set of formal safety rules.

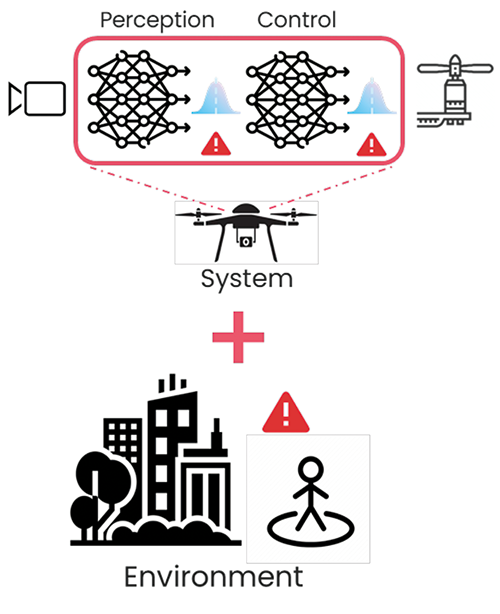

A model of safe behavior is built from the set of rules and used to assess how “healthy” the system and its environment are. The system health assessment is based on the functional risks inherent to AI, such as processing data that diverges significantly from the data used to develop the model. The health of the environment is determined by the risks present at a given point in time (Figure 1). Depending on the outcome of the health assessment, a number of actions can be taken to maintain the system in its current state or restore it to a safe state.

At runtime, the safety supervisor receives information from the other components of the system. This information is compared with the safe behavior model, and the appropriate action is triggered.
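The supervision step described above can be illustrated with a minimal Python sketch. It is not CEA-List's implementation: the rule names, the status fields (`confidence`, `ood_score`, `obstacle_distance_m`), the thresholds, and the three actions are all hypothetical, chosen only to show how a set of rules might be checked against incoming information to select an action.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple


class Action(Enum):
    """Hypothetical supervisor actions, ordered by severity."""
    CONTINUE = 0    # keep the system in its current state
    DEGRADE = 1     # e.g. reduce speed while confidence is low
    SAFE_STOP = 2   # restore the system to a safe state


@dataclass(frozen=True)
class SafetyRule:
    """One safety rule: a violation predicate and the action it calls for."""
    name: str
    violated: Callable[[Dict[str, float]], bool]
    action: Action


# Illustrative rules only; fields and thresholds are assumptions.
RULES: List[SafetyRule] = [
    # System health: the perception model is unsure of its output.
    SafetyRule("low_perception_confidence",
               lambda s: s["confidence"] < 0.6, Action.DEGRADE),
    # System health: input diverges from the data used to develop the model.
    SafetyRule("out_of_distribution_input",
               lambda s: s["ood_score"] > 0.8, Action.SAFE_STOP),
    # Environment health: a risk present at this point in time.
    SafetyRule("obstacle_too_close",
               lambda s: s["obstacle_distance_m"] < 1.0, Action.SAFE_STOP),
]


def supervise(status: Dict[str, float],
              rules: List[SafetyRule] = RULES) -> Tuple[Action, List[str]]:
    """Compare a status report with the rules and pick the most severe action."""
    triggered = [rule for rule in rules if rule.violated(status)]
    action = max((rule.action for rule in triggered),
                 key=lambda a: a.value, default=Action.CONTINUE)
    return action, [rule.name for rule in triggered]


if __name__ == "__main__":
    report = {"confidence": 0.5, "ood_score": 0.1, "obstacle_distance_m": 5.0}
    print(supervise(report))
```

In this sketch the most severe action among the violated rules wins. That is one simple arbitration policy among several possible ones, and a real safe behavior model would likely carry state over time rather than evaluate each report in isolation.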

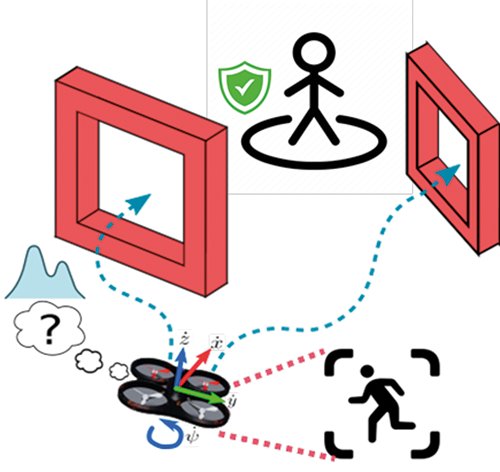

This supervision architecture was tested on an autonomous drone use case that involved flying the drone through a set of gates whose locations were not known in advance (Figure 2). The tests were run in the AirSim simulation environment. The drone was represented by three distributed functional blocks that communicated using ROS 2.

AIs deployed in modular, distributed robotic architectures can be supervised during safety-critical tasks using our framework.