Deploying AI in Edge applications is challenging. AI models, with their billions of parameters, require large amounts of computing resources and power, while Edge environments are severely constrained in both size and power.

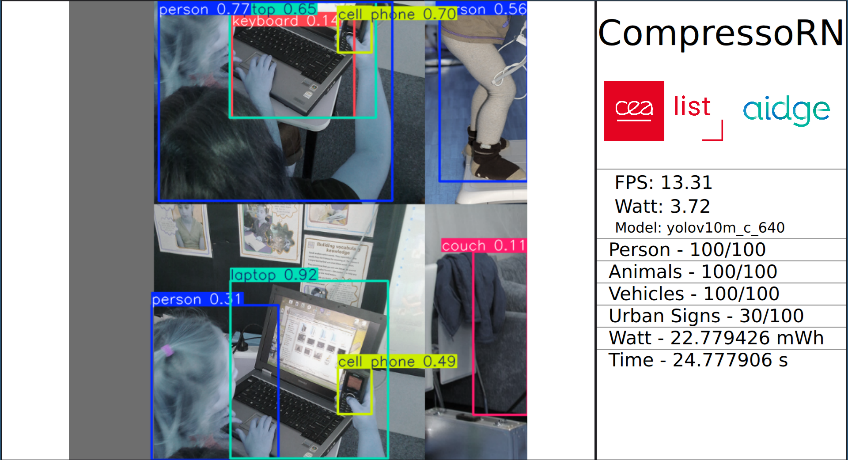

The solution is to shrink the neural networks that make up AI models. Researchers at CEA-List developed a new compression method that can make convolutional neural networks up to 60% smaller, and inference 30% faster. The method proved successful on image analysis models, opening new possibilities for integrating perception algorithms into embedded systems. This could be of interest for autonomous driving, for example, where AI must process captured images rapidly.

The technology is now part of the Eclipse Aidge open-source Edge AI platform developed as part of the DeepGreen project. With this advance, manufacturers now have a new method for optimizing the sizes of their neural networks for the target hardware’s physical and computing constraints.

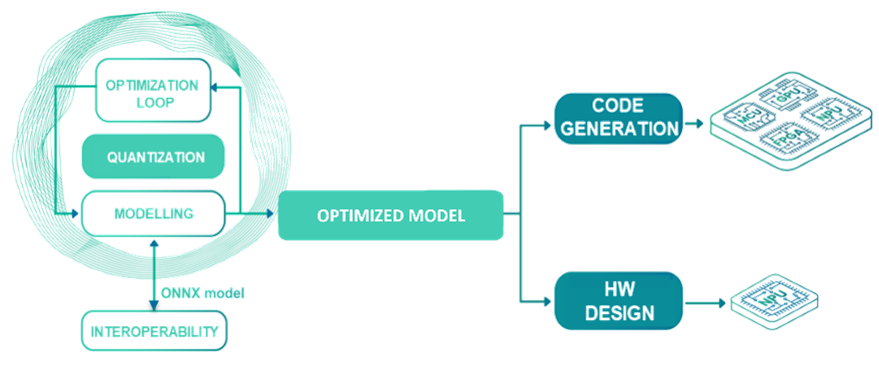

The new solution integrated into the Eclipse Aidge platform starts by analyzing a network chosen by the user. It then optimizes the network compression parameters for the target hardware size, the available resources, the specifics of the target use case, and other user-defined criteria. Finally, it automatically generates the compressed network and exports it to the target hardware.
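To make the idea of fitting a network to a hardware target concrete, here is a minimal Python sketch. It is not the Aidge implementation or its API: the function name `pick_compression_ratio`, the candidate ratios, and the sizes are all hypothetical, and the selection rule (keep the mildest compression that fits the memory budget) is a deliberate simplification that ignores accuracy and use-case criteria.

```python
def pick_compression_ratio(original_size_kib, memory_budget_kib, candidate_ratios):
    """Return the smallest candidate ratio whose compressed size fits the budget.

    A ratio of 0.4 means 40% of the original size is removed.
    Returns None when no candidate fits. (Hypothetical helper, for illustration.)
    """
    for ratio in sorted(candidate_ratios):
        compressed_size_kib = original_size_kib * (1.0 - ratio)
        if compressed_size_kib <= memory_budget_kib:
            return ratio
    return None


# Made-up numbers: a 1000 KiB network and a 650 KiB memory budget.
chosen = pick_compression_ratio(1000.0, 650.0, [0.0, 0.2, 0.4, 0.6])
print(chosen)  # 0.4, since 600 KiB is the first candidate size that fits
```

A real tool would also have to check that the compressed network still meets the accuracy required by the use case, which this sketch leaves out.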

The software leverages low-rank factorization, an innovative method whose primary advantage is very low degradation (around 1%) of the accuracy of the results produced. The matrix representing the network nodes is decomposed into a product of smaller matrices. The approach is not limited to simple 2D matrices: it can be generalized to tensors, which have more than two dimensions. The smaller matrices require less computing and memory, which in turn lowers power consumption and speeds up execution.
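The decomposition of one matrix into a product of smaller ones can be illustrated with a truncated singular value decomposition (SVD) in NumPy. This is a generic textbook sketch, not the method implemented in Aidge: the helper names and the toy weight matrix are assumptions made for the example, and the tensor generalization is not shown.

```python
import numpy as np


def low_rank_factorize(weight, rank):
    """Approximate `weight` (m x n) by left @ right.

    left has shape (m, rank) and right has shape (rank, n).
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    left = u[:, :rank] * s[:rank]  # fold the singular values into the left factor
    right = vt[:rank, :]
    return left, right


def parameter_count(*arrays):
    """Total number of stored values across the given arrays."""
    return sum(a.size for a in arrays)


rng = np.random.default_rng(0)
# Toy 256 x 512 weight matrix built to have rank 8 (stand-in for a layer).
weight = rng.standard_normal((256, 8)) @ rng.standard_normal((8, 512))

left, right = low_rank_factorize(weight, rank=8)
relative_error = np.linalg.norm(weight - left @ right) / np.linalg.norm(weight)

print(parameter_count(weight))       # 131072 values in the original matrix
print(parameter_count(left, right))  # 6144 values in the two factors
print(relative_error)
```

The toy matrix is exactly low-rank by construction, so the reconstruction error is close to zero. Real layers are only approximately low-rank, so the chosen rank trades size against accuracy.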

The tensor-decomposition compression module, based on low-rank factorization, is used in tandem with quantization-based compression, which reduces node parameter precision, in a way that takes full advantage of the two approaches’ complementarity.
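As a rough illustration of how the two techniques can be chained, the sketch below factorizes a toy weight matrix and then stores each factor as 8-bit integers. It assumes a simple symmetric per-tensor int8 scheme and a truncated SVD; neither is confirmed to be what Aidge uses, and the function names are hypothetical.

```python
import numpy as np


def quantize_int8(x):
    """Symmetric per-tensor quantization: x is approximated by scale * q."""
    max_abs = np.max(np.abs(x))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to float32."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
# Toy float32 weight matrix of rank 8 (stand-in for a layer).
weight = (rng.standard_normal((128, 8)) @ rng.standard_normal((8, 256))).astype(np.float32)

# Step 1: low-rank factorization via truncated SVD.
rank = 8
u, s, vt = np.linalg.svd(weight, full_matrices=False)
left, right = u[:, :rank] * s[:rank], vt[:rank, :]

# Step 2: reduce the precision of each factor to 8 bits.
q_left, scale_left = quantize_int8(left)
q_right, scale_right = quantize_int8(right)

approx = dequantize(q_left, scale_left) @ dequantize(q_right, scale_right)
relative_error = np.linalg.norm(weight - approx) / np.linalg.norm(weight)

print(weight.nbytes)                  # 131072 bytes for the float32 original
print(q_left.nbytes + q_right.nbytes) # 3072 bytes for the int8 factors
print(relative_error)
```

Factorization reduces the number of stored values and quantization reduces the bytes per value, which is why the two gains multiply in this toy case.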

Upcoming research will investigate more sophisticated ways to combine the two methods to optimize compression even more.

The DeepGreen project, led by the CEA, involves around 20 research and industrial partners from France—all united around the common goal of building sovereign, trustworthy Edge AI solutions.

The project partners created the Eclipse Aidge open-source platform, a complete Edge AI toolkit, plus a set of demonstrators that showcase sovereign technologies. CEA-List’s N2D2 environment has been integrated into Aidge.

DeepGreen is part of the France 2030 program.

The compression module integrated into Eclipse Aidge is a significant result of CEA-List’s neural network compression research.

New research avenues being investigated include how to conserve the neural network’s initial properties—especially robustness to cyberattacks—after compression. This research is underway as part of the HOLIGRAIL (HOListic Approaches to GReener model Architectures for Inference and Learning) project, part of the French national PEPR IA instrument, which funds research and research infrastructure for AI.

CEA-List researchers are also working on generalizing low-rank factorization methods to all kinds of tensors. One of their objectives is to leverage these methods to compress the neural networks used by notoriously huge and power-hungry Large Language Models (LLMs).

“The compression model our people developed makes convolutional neural networks significantly smaller, without compromising on performance or robustness.”

Not only can we now shrink a neural network for deployment in a constrained environment, but, conversely, we can also determine the optimal compression ratio for a given hardware target to maximize the initial size of the network.