We took a conventional tablet and integrated localized, multi-point vibrotactile feedback, along with AI algorithms for image analysis and simplification, text extraction, and predictive input.

This vibrotactile feedback is output by a matrix of piezoelectric actuators in the form of a patch, glued directly under the screen using cleanroom-compatible processes developed by CEA-Leti. The first 10.5-inch OLED tablet prototype is now ready for testing by target users.

The localized, multi-point tactile feedback, made possible by work at CEA-List, supports a wide range of practical use cases: from the now-common confirmation vibration, to button feedback that matches the force of the press, to richer and more complex experiences such as textures.

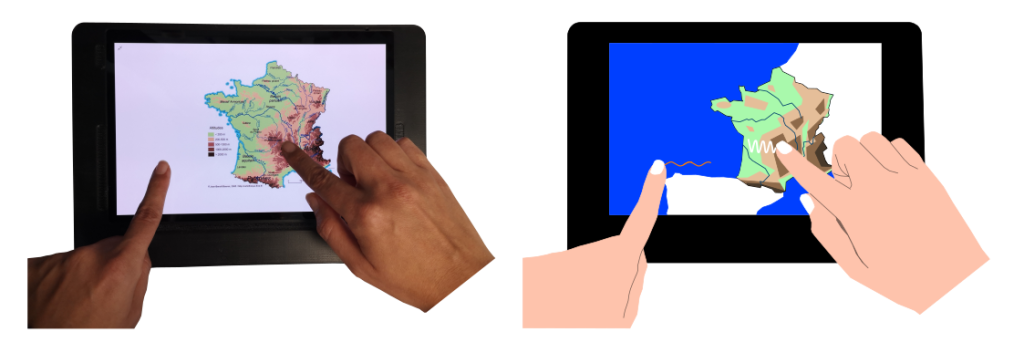

For example, a visually impaired person who wants to familiarize themselves with a travel route can pull up a map on their tablet and explore it with both hands, notably so that a second finger can serve as a reference point. They can feel the different elements of the map (roads, buildings, open spaces, etc.) because each is assigned its own texture, triggered on contact, with an added effect of attraction toward the destination.
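To make the map example more concrete, here is a minimal sketch of how touch points might be turned into texture commands. It is not the project's implementation: the grid map, the `Texture` parameters (frequency and amplitude), the `TEXTURES` table, and the distance-based `attraction_gain` are all hypothetical choices for illustration. Each finger in contact is handled independently, which matches the two-handed exploration described above. Driving the piezoelectric matrix so the vibration stays localized under each finger is out of scope here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Texture:
    """Hypothetical vibrotactile texture: carrier frequency and normalized amplitude."""
    frequency_hz: float
    amplitude: float  # 0.0 (off) to 1.0 (maximum)


@dataclass(frozen=True)
class Touch:
    """One contact point on the screen, in arbitrary screen units."""
    x: float
    y: float


# Hypothetical assignment of one texture per map element type.
TEXTURES = {
    "road": Texture(frequency_hz=250.0, amplitude=0.8),
    "building": Texture(frequency_hz=100.0, amplitude=0.6),
    "open_space": Texture(frequency_hz=40.0, amplitude=0.3),
}


def element_at(grid, x, y, cell_size):
    """Return the element type in the grid cell under (x, y), or None if off the map."""
    row, col = int(y // cell_size), int(x // cell_size)
    if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
        return grid[row][col]
    return None


def attraction_gain(x, y, dest, max_dist):
    """Hypothetical attraction cue in [0, 1]: 1 at the destination, 0 at max_dist or beyond."""
    d = math.hypot(x - dest[0], y - dest[1])
    return max(0.0, 1.0 - d / max_dist)


def render_touches(grid, touches, cell_size, dest, max_dist, attraction_weight=0.2):
    """Return one (touch, texture or None) pair per contact point."""
    commands = []
    for t in touches:
        texture = TEXTURES.get(element_at(grid, t.x, t.y, cell_size))
        if texture is None:
            commands.append((t, None))  # off the map or unknown element: no vibration
            continue
        # Strengthen the texture as the finger nears the destination, capped at 1.0.
        boost = attraction_weight * attraction_gain(t.x, t.y, dest, max_dist)
        commands.append((t, Texture(texture.frequency_hz, min(1.0, texture.amplitude + boost))))
    return commands
```

A usage example with two fingers, one on a road and one on a building:

```python
grid = [
    ["building", "road", "open_space"],
    ["building", "road", "open_space"],
]
for touch, texture in render_touches(
    grid, [Touch(15.0, 5.0), Touch(5.0, 15.0)], cell_size=10.0, dest=(15.0, 15.0), max_dist=30.0
):
    print(touch, texture)
```

Expressing the attraction as an amplitude boost keeps each element's texture recognizable while still signaling proximity. A directional cue, such as a texture whose pattern points toward the goal, would be an equally plausible alternative.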

The multisensory tablet also aims to combine touch with visual and auditory feedback, adapting to users' varying needs and forms of visual impairment.

+ 1 patent before the project
+ 2 publications before the project