Combining machine learning and numerical simulation in hybrid tools will facilitate major scientific advances in computational physics. Numerical simulation is used, for instance, to probe the microscopic properties of materials. However, this approach is currently limited by the cost of calculating atomic energies and forces. To get around this cost, we use deep learning models to predict these expensive quantities, which speeds up the simulation.

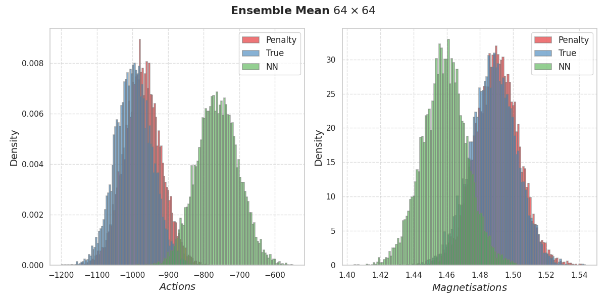

Although deep learning models predict these quantities well, their inherent uncertainty introduces a bias into the physical observables calculated from the simulation. This is because machine learning relies on a finite set of observations to fit a model whose parameters remain uncertain. This epistemic uncertainty and the resulting bias currently constitute one of the main hurdles to the use of AI in numerical simulation.

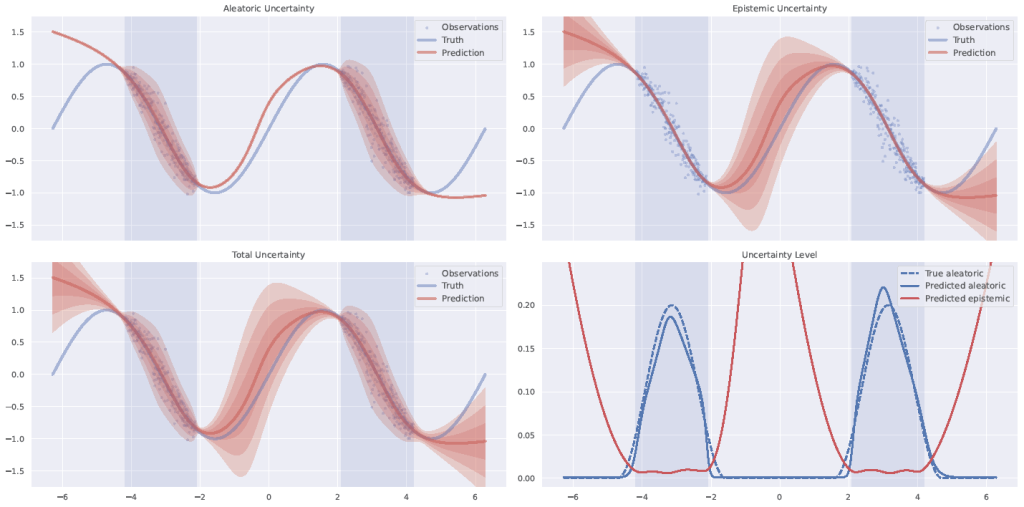

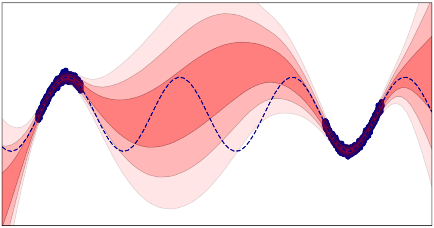

Our methods for quantitatively measuring the uncertainty of neural network predictions are intended to support new techniques for correcting simulation bias. Using Bayesian statistical inference, we can calculate the probability that a particular setting of the network's parameters is correct given the limited number of observations available. This probability depends on the noise that the data may contain; this second source of uncertainty, inherent to the data, is called aleatoric.
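
In standard Bayesian notation, the idea can be written as follows. The symbols are our own shorthand for illustration, and the Gaussian noise model is an assumption rather than a statement of what the software uses: $\theta$ denotes the network's weights and biases, $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ the observations, $f_\theta$ the network, and $\sigma^2$ the aleatoric noise variance.

$$
p(\theta \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \theta)\, p(\theta)}{p(\mathcal{D})},
\qquad
p(\mathcal{D} \mid \theta) = \prod_{i=1}^{N} \mathcal{N}\!\left(y_i \mid f_\theta(x_i), \sigma^2\right)
$$

$$
p(y^\ast \mid x^\ast, \mathcal{D}) = \int p(y^\ast \mid x^\ast, \theta)\, p(\theta \mid \mathcal{D})\, \mathrm{d}\theta
$$

The spread of the posterior $p(\theta \mid \mathcal{D})$ carries the epistemic uncertainty, which shrinks as more observations are added, while $\sigma^2$ carries the aleatoric uncertainty, which does not.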

The method is implemented in our CAUTIONER (unCertAinty qUanTificatIOn Neural nEtwoRk) software, which calculates the uncertainty of a neural network by transforming it into a Bayesian neural network, that is, a network defined by a probability distribution over its weights and biases. This new model cannot be “trained” using conventional algorithms. At CEA-List, we drew on our extensive know-how in probabilistic programming to develop CAUTIONER, which is based on Markov Chain Monte Carlo (MCMC) methods, widely regarded as the gold standard for calculating predictions and their uncertainty. CAUTIONER is available from the CEA as a Python library with its APIs, documentation, and a graphical interface to facilitate its use on GPU-based clusters.
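
To make the MCMC idea concrete, here is a minimal sketch of sampling the weights of a tiny Bayesian neural network with a random-walk Metropolis sampler. It is not CAUTIONER's API or algorithm: every function name, the one-hidden-layer architecture, the Gaussian prior and noise model, and the toy data are assumptions chosen for illustration, and practical tools typically rely on more efficient samplers.

```python
import numpy as np

HIDDEN = 4  # hypothetical size of the single hidden layer


def predict(theta, x, hidden=HIDDEN):
    """Evaluate a 1-input, 1-output tanh network from a flat parameter vector."""
    w1 = theta[:hidden]
    b1 = theta[hidden:2 * hidden]
    w2 = theta[2 * hidden:3 * hidden]
    b2 = theta[3 * hidden]
    h = np.tanh(np.outer(x, w1) + b1)  # shape (n_points, hidden)
    return h @ w2 + b2


def log_posterior(theta, x, y, noise_std=0.1, prior_std=1.0):
    """Unnormalised log posterior: Gaussian likelihood plus Gaussian prior (assumed)."""
    resid = y - predict(theta, x)
    log_lik = -0.5 * np.sum(resid ** 2) / noise_std ** 2
    log_prior = -0.5 * np.sum(theta ** 2) / prior_std ** 2
    return log_lik + log_prior


def metropolis(x, y, n_steps=5000, step=0.02, seed=0):
    """Random-walk Metropolis over all weights and biases."""
    rng = np.random.default_rng(seed)
    theta = rng.normal(scale=0.1, size=3 * HIDDEN + 1)
    logp = log_posterior(theta, x, y)
    samples, accepted = [], 0
    for _ in range(n_steps):
        proposal = theta + step * rng.normal(size=theta.shape)
        logp_new = log_posterior(proposal, x, y)
        # Accept with probability min(1, posterior ratio).
        if np.log(rng.uniform()) < logp_new - logp:
            theta, logp = proposal, logp_new
            accepted += 1
        samples.append(theta.copy())
    return np.array(samples), accepted / n_steps


# Toy data (made up for the example): a noisy sine curve.
data_rng = np.random.default_rng(1)
x_train = np.linspace(-1.0, 1.0, 20)
y_train = np.sin(3.0 * x_train) + 0.1 * data_rng.normal(size=x_train.shape)

samples, acc_rate = metropolis(x_train, y_train)
kept = samples[len(samples) // 2::10]  # drop the first half as burn-in, then thin

# Each retained sample is one plausible network; their spread is the uncertainty.
x_test = np.linspace(-2.0, 2.0, 50)
preds = np.array([predict(t, x_test) for t in kept])
pred_mean, pred_std = preds.mean(axis=0), preds.std(axis=0)
```

The prediction is no longer a single curve but an ensemble of curves, one per sampled parameter vector; `pred_std` summarises how much they disagree at each test point.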

We are also developing new probabilistic deep neural networks with a Bayesian Last Layer (BLL), a variant of the classic Bayesian neural network in which only the last layer is treated probabilistically. This offers the unique advantage that prediction uncertainty is available by design and can be calculated analytically. This led to the development of a powerful EM (Expectation Maximization) optimization algorithm for learning the neural network parameters.
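
The analytic character of a Bayesian last layer can be illustrated with a short sketch. It assumes a Gaussian prior on the last-layer weights and Gaussian observation noise, in which case the last layer reduces to textbook Bayesian linear regression on the features produced by the earlier layers. The random feature map stands in for trained hidden layers, the precisions `alpha` and `beta` are fixed by hand rather than learned by EM, and all names are hypothetical.

```python
import numpy as np


def features(x, w, b):
    """Stand-in for the deterministic hidden layers: fixed random tanh features."""
    return np.tanh(np.outer(x, w) + b)


def fit_last_layer(phi, y, alpha=1.0, beta=100.0):
    """Closed-form Gaussian posterior over the last-layer weights.

    alpha: prior precision of the weights; beta: noise precision (1 / sigma^2).
    """
    d = phi.shape[1]
    precision = alpha * np.eye(d) + beta * phi.T @ phi
    cov = np.linalg.inv(precision)
    mean = beta * cov @ phi.T @ y
    return mean, cov


def predict_bll(phi_new, mean, cov, beta=100.0):
    """Analytic predictive mean and variance; no sampling is needed."""
    pred_mean = phi_new @ mean
    # Noise variance plus the quadratic form phi^T cov phi for each row of phi_new.
    pred_var = 1.0 / beta + np.einsum("ij,jk,ik->i", phi_new, cov, phi_new)
    return pred_mean, pred_var


rng = np.random.default_rng(0)
w_hidden = rng.normal(scale=2.0, size=16)  # frozen "hidden layer" parameters
b_hidden = rng.normal(size=16)

# Toy data (made up for the example): a noisy sine curve.
x_train = np.linspace(-1.0, 1.0, 30)
y_train = np.sin(3.0 * x_train) + 0.1 * rng.normal(size=x_train.shape)

w_mean, w_cov = fit_last_layer(features(x_train, w_hidden, b_hidden), y_train)

x_test = np.linspace(-2.0, 2.0, 50)
bll_mean, bll_var = predict_bll(features(x_test, w_hidden, b_hidden), w_mean, w_cov)
```

In this formulation the predictive variance splits into two terms: `1 / beta` is the aleatoric part, and the quadratic form involving `w_cov` is the epistemic part coming from the uncertain last-layer weights.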

With uncertainty quantification tools like these, we can design bias corrections for the numerical simulations mentioned above, opening the door to scientific advances in computational physics and materials science.