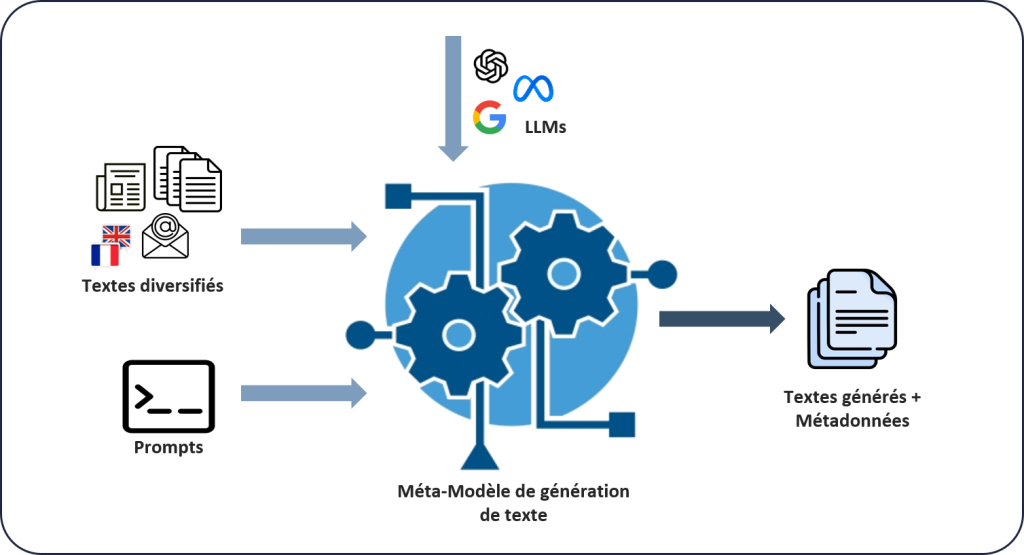

The text generation system (Figure 1) integrates a generation meta-model compatible with state-of-the-art LLMs. It takes source texts in French or English as input and generates similar texts from them. The models used were Llama2 7B Chat, Flan-T5 XXL, Bloomz 7B1 Mt, Falcon-7B Instruct, GPT4All 13B Snoozy, and OpenAI GPT-3.5.

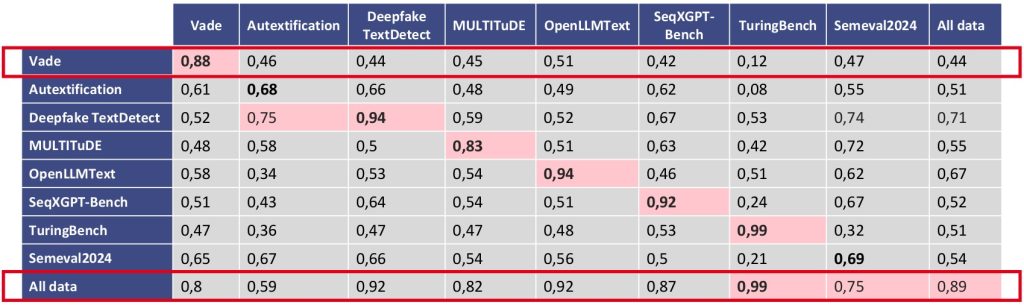

The detection system (Figure 2) identifies AI-generated text using a “black-box” approach, which requires no access to the generating model's internals and therefore applies to both open-source and proprietary models. Built around a fine-tuned multilingual model, it classifies the input text and assigns a confidence score to the prediction. Bidirectional transformer models proved more effective for this task than autoregressive models. Measured by F1 score, mDeBERTa V3 almost always outperformed mBERT and XLM-RoBERTa.
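As a rough illustration of the "classify and assign a confidence score" step, the sketch below turns the raw output scores (logits) of a two-class classifier into a label and a confidence value using a softmax. It is not the project's implementation: the label names, their order, and the example logits are assumptions made for illustration, and a real system would obtain the logits from the fine-tuned model.

```python
import math

# Hypothetical label order; the source does not specify the class names.
LABELS = ("human", "ai")


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the most probable label and its probability as the confidence."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Made-up logits standing in for the classifier head's output.
label, confidence = classify([-1.2, 2.3])
print(label, round(confidence, 3))
```

Taking the highest softmax probability as the confidence is one common choice; a deployed detector might instead calibrate the score or apply a decision threshold.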

To test how well the system generalizes across diverse datasets, further experiments focused on mDeBERTa V3; the resulting F1 scores are detailed in Figure 3. These results validate the effectiveness of the black-box approach and show that diverse training data is critical to robust detection.
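For reference, the F1 score is the harmonic mean of precision $P$ and recall $R$, that is, $F_1 = 2PR / (P + R)$. The sketch below computes it for a single positive class on a tiny made-up sample. The label names and data are hypothetical, and the source does not say whether its reported scores are binary, macro-averaged, or weighted, so this shows only the basic binary case.

```python
def f1_score(y_true, y_pred, positive="ai"):
    """Binary F1 for the `positive` class: harmonic mean of precision and recall."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels, invented for illustration only.
y_true = ["ai", "ai", "ai", "human", "human"]
y_pred = ["ai", "ai", "human", "ai", "human"]
score = f1_score(y_true, y_pred)
print(f"F1 = {score:.3f}")
```

Here two of the three AI-written samples are caught and one human text is misflagged, so precision and recall are both 2/3 and the F1 score is 2/3 as well.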